Experiment Launched! Project Midpoint Update

Contest winners, recruitment results, midline survey, and more

It’s been so long since our last post here that I’ve heard some of you thought the project had died. Not so! Honestly, all the action just moved to Discord while we built out the infrastructure, recruited participants, and got the finalist rankers up and running. Inevitably, everything took longer and cost more than we had planned, but everything is also working now! In short, here’s where we are:

We have chosen three winning rankers, plus two based on Google Jigsaw’s experimental bridging classifiers.

We finished the software and got all of the experimental arms running.

We recruited about 7,000 participants.

About 5,000 of these answered the midline survey in the days before the Nov 5 election.

Our final survey wave will be in late January. We are not doing any data analysis before then, so we have no results yet. But we have an analysis plan!

The Winning Rankers

The eight finalists were selected back in May by peer review from our panel of judges. We knew that the number we could actually run would depend on how many participants we could recruit. In the end, we picked three.

Heartbreak

Siddarth Srinivasan, Manuel Wüthrich, Max Spohn, Sara Fish, Peter Mason, Safwan Hossain

This algorithm injects content that users likely disagree with from sources they usually agree with (and vice versa), to study the impact on users’ perceptions of out-groups and their inclination to consider other perspectives. This is a test of what is sometimes known as the “surprising validator” theory. The ranker scrapes hundreds of sources on each platform to find personalized posts. The user’s ideological alignment is determined by their answers on the baseline survey, while the politics of each piece of content are determined by an LLM. The 15% most civic/political posts in each user’s feed are replaced with this surprising validator content.
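A minimal sketch of the Heartbreak serving step may help make the replacement rule concrete. It assumes each candidate post already carries a source leaning and an LLM-assigned stance (simplified here to hypothetical "left"/"right" labels) and that each feed post has a hypothetical `civic_score`; scraping, LLM labeling, and any ordering of candidates by relevance are left out, and all names are illustrative rather than the team’s code.

```python
from dataclasses import dataclass


@dataclass
class Post:
    post_id: str
    source_leaning: str  # hypothetical: "left" or "right" for the post's source
    stance: str          # hypothetical: "left" or "right", as an LLM might label the content
    civic_score: float   # hypothetical: how civic/political the post is, 0 to 1


def opposite(leaning: str) -> str:
    return "right" if leaning == "left" else "left"


def surprising_validators(pool: list[Post], user_leaning: str) -> list[Post]:
    """Disagreeable content from agreeable sources, and vice versa."""
    other = opposite(user_leaning)
    return [
        p for p in pool
        if (p.source_leaning == user_leaning and p.stance == other)
        or (p.source_leaning == other and p.stance == user_leaning)
    ]


def rerank(feed: list[Post], pool: list[Post], user_leaning: str, percent: int = 15) -> list[Post]:
    """Swap the most civic `percent` of the feed for surprising-validator posts."""
    candidates = surprising_validators(pool, user_leaning)
    # Integer arithmetic avoids float rounding surprises when computing 15% of the feed.
    n_replace = min(len(feed) * percent // 100, len(candidates))
    # Indices of the n_replace most civic posts currently in the feed.
    by_civic = sorted(range(len(feed)), key=lambda i: feed[i].civic_score, reverse=True)
    slots = sorted(by_civic[:n_replace])
    new_feed = list(feed)
    for slot, candidate in zip(slots, candidates):
        new_feed[slot] = candidate
    return new_feed
```

Here the new posts land in the slots vacated by the most civic posts, which is one reasonable reading of “replaced”; the real ranker may position them differently. The user’s leaning would come from the baseline survey.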

Feedspan

Luke Thorburn, Soham De, Kayla Duskin, Hongfan Lu, Aviv Ovadya, Smitha Milli, Martin Saveski

The algorithm finds content that is expected to attract politically diverse engagement. It scrapes a variety of sources on each platform, including news outlets, politicians, and nonprofit organizations, and uses an LLM trained on previous engagement data to predict whether a post is likely to be liked, shared, etc. by people on both the left and the right. High-quality sources were identified through Media Bias Fact Check’s list of least biased, left-center, and right-center sources, augmented with state governors, national legislators, and several other sources such as members of the Bridge Alliance. This resulted in 1130 Facebook pages, 1620 Twitter handles, and 29 subreddits. At serving time, the ranker finds civic content in the participant’s feed and replaces it with this non-personalized bridging content, up to 15% of the total feed.
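The Feedspan serving rule can be sketched in a similar way. This sketch assumes the engagement model yields two probabilities per post, one for left-leaning and one for right-leaning users, and scores a post by the smaller of the two, so it ranks highly only if both sides are predicted to engage. That minimum rule, the hypothetical `is_civic` lookup, and replacing civic posts in feed order until the 15% cap is hit are all illustrative assumptions.

```python
def bridging_score(p_left: float, p_right: float) -> float:
    # Assumption: a post bridges only as well as its weaker side, so use the minimum.
    return min(p_left, p_right)


def feedspan_rerank(
    feed: list[str],
    is_civic: dict[str, bool],
    pool: list[tuple[str, float, float]],
    cap_percent: int = 15,
) -> list[str]:
    """Replace civic posts (in feed order) with the best bridging posts, up to the cap.

    `pool` holds (post_id, p_engage_left, p_engage_right); the same pool serves every user.
    """
    cap = len(feed) * cap_percent // 100
    ranked = sorted(pool, key=lambda t: bridging_score(t[1], t[2]), reverse=True)
    replacements = iter(post_id for post_id, _, _ in ranked)
    out, replaced = [], 0
    for post_id in feed:
        if is_civic.get(post_id, False) and replaced < cap:
            substitute = next(replacements, None)
            if substitute is not None:
                out.append(substitute)
                replaced += 1
                continue
        out.append(post_id)
    return out
```

Because the pool is not personalized, every participant in this arm would draw from the same ranked list; only which slots get replaced depends on their own feed.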

Personalized Quality News Upranking

Muhammed Haroon, Anshuman Chhabra, Magdalena E Wojcieszak

This algorithm inserts news posts from credible and ideologically diverse news sources, tailored to each user's interests. This increases the user’s exposure to factual public affairs information, with the goal of increasing knowledge, efficacy, political interest, and belief accuracy. We selected an ideologically balanced set of 245 verified news sources from the high-reliability source lists of NewsGuard and Ad Fontes. To personalize the feed, both these news posts and posts the user was previously served by the platform are embedded using a pre-trained model, and the nearest K news posts are selected for each user. This matches the topics of the added news to topics that the user is already consuming. These new posts make up as much as 15% of the user’s feed.
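The nearest-K step can be illustrated with cosine similarity over embedding vectors. This sketch assumes the user’s history is summarized by the centroid (mean) of the embeddings of posts they were served, and uses tiny hand-written vectors in place of the pre-trained model’s output; the team’s actual aggregation and similarity measure may differ.

```python
import math


def cosine(u: list[float], v: list[float]) -> float:
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def centroid(vectors: list[list[float]]) -> list[float]:
    # Assumption: the user's interests are represented by the mean history embedding.
    n = len(vectors)
    return [sum(column) / n for column in zip(*vectors)]


def nearest_k_news(
    history: list[list[float]], news: dict[str, list[float]], k: int
) -> list[str]:
    """Return ids of the k news posts most similar to the user's history centroid."""
    profile = centroid(history)
    scored = sorted(news.items(), key=lambda item: cosine(profile, item[1]), reverse=True)
    return [news_id for news_id, _ in scored[:k]]


history = [[1.0, 0.0, 0.0], [0.9, 0.1, 0.0]]  # toy embeddings of posts the user was served
news = {
    "sports": [1.0, 0.0, 0.0],
    "politics": [0.0, 1.0, 0.0],
    "science": [0.0, 0.0, 1.0],
    "mixed": [0.7, 0.7, 0.0],
}
print(nearest_k_news(history, news, k=2))
```

Averaging into a single centroid is the simplest choice; a user with several distinct interests might be better served by matching news against each history post separately.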

Jigsaw rankers

We are also testing two experimental arms based on Google Jigsaw’s new experimental bridging classifiers. These are extensions of the widely used Perspective API that include classifiers for both positive attributes (like curiosity, compassion, nuance, and reasoning) and negative attributes (like insult, identity attack, moral outrage, and alienation). We have one experimental arm that ranks by an average of several positive attributes, and another that ranks by an average of positive attributes minus an average of negative attributes. Both of these simply reorder posts and comments, without adding or deleting anything.
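Both Jigsaw arms amount to a score followed by a sort. In this sketch the attribute names are taken from the examples listed above and the per-item scores are assumed to be precomputed numbers between 0 and 1; exactly which attributes each arm averages, and how scores are requested from the classifiers, are not specified here.

```python
from statistics import mean

# Illustrative attribute names, taken from the examples in the text.
POSITIVE = ["curiosity", "compassion", "nuance", "reasoning"]
NEGATIVE = ["insult", "identity_attack", "moral_outrage", "alienation"]


def positive_score(scores: dict[str, float]) -> float:
    """Arm 1: average of the positive attributes."""
    return mean(scores[name] for name in POSITIVE)


def positive_minus_negative(scores: dict[str, float]) -> float:
    """Arm 2: average positive attributes minus average negative attributes."""
    return mean(scores[name] for name in POSITIVE) - mean(scores[name] for name in NEGATIVE)


def reorder(items: list[dict], score_fn) -> list[dict]:
    """Reorder only: every item is kept, highest score first.

    Python's sort is stable even with reverse=True, so ties keep their original feed order.
    """
    return sorted(items, key=lambda item: score_fn(item["scores"]), reverse=True)
```

The two arms can disagree: a post that scores high on curiosity but also high on insult rises in the first arm and sinks in the second.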

Recruitment

We had originally planned to recruit 1,500 users for each arm, plus a double-sized control group. This would have been 15,000 users in total. However, we knew that recruitment for a study of this size was a risky proposition and could well turn out to be much more expensive and take a lot longer than we wanted, which it did. Recruitment is certainly one of the areas where we learned the most.

Initially, we had budgeted $20 per participant to install the extension. This was based on the cost of a previous, smaller study by some of our team, which recruited using Facebook ads. However, that study ran in 2022; in 2024, during an election cycle, we found we could not get anywhere near that cost on Facebook, X, or Reddit. Instead, we were seeing $50-$90 per install. We experimented with different ad copy and images, signup flows, and so on, but we were never able to get good results from ads.

So we turned to a dozen market research vendors. They recruited people to take a short screening survey confirming that they used social media on desktop Chrome, then asked whether they wanted to participate in a study that involved installing an extension and keeping it installed for five or six months, for $10. We paid the market research vendors a flat rate for each person who said yes. Unfortunately, saying yes didn’t make someone a participant. After saying yes, they had to visit the landing page with information on the study, click through to actually install the extension, provide their email, fill out the 10-15 minute baseline survey, and not immediately uninstall the extension.

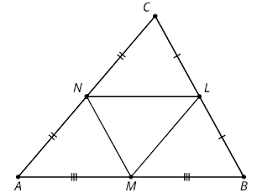

Our recruitment funnel looked something like this:

Suppose 1,000 people start the screening survey

From these, 300 qualify, say they are interested, and go to our landing page

Of those, 60 install the extension

Then 30 finish the baseline survey and keep the extension installed

Over 250,000 people started the screening survey (!), resulting in over 15,000 installs but only about 7,000 participants. Our total cost per installation ended up being about $50. We are also paying each person $10-$15 directly for participating. So that’s $60-$65 per participant.
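The funnel is easiest to read as stage-to-stage conversion rates. This sketch divides each stage by the one before it, using the illustrative 1,000-person funnel described above; the stage labels are our own shorthand.

```python
def stage_rates(funnel: list[tuple[str, int]]) -> list[tuple[str, float]]:
    """Conversion rate from each stage to the next."""
    return [(name, n / prev) for (_, prev), (name, n) in zip(funnel, funnel[1:])]


def overall_rate(funnel: list[tuple[str, int]]) -> float:
    """Fraction of the first stage that makes it to the last."""
    return funnel[-1][1] / funnel[0][1]


# The illustrative funnel from the text, per 1,000 people who start screening.
illustrative = [
    ("started screening", 1000),
    ("reached landing page", 300),
    ("installed extension", 60),
    ("finished baseline, kept extension", 30),
]

for stage, rate in stage_rates(illustrative):
    print(f"{stage}: {rate:.0%}")
print(f"overall: {overall_rate(illustrative):.0%}")
```

For the illustrative funnel this gives 30%, 20%, and 50% per stage and 3% overall. The headline figures line up: 15,000 installs from 250,000 screenings is 6%, the same install rate as 60 per 1,000, while about 7,000 participants from 15,000 installs is roughly 47%.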

On the bright side, we have learned so much about recruitment, survey response rates, and generally how to run such a large field experiment that we will be publishing a separate methods paper to share our knowledge (including more precise figures on the above). This kind of experiment is expensive, but we think we know how to make it cheaper — perhaps half as much per participant with good planning, good infrastructure, and amortization of a panel across multiple studies.

Midline Survey

Our original plan was to start the experiment in July and run it for four months prior to the election, with a midline survey at the beginning of September and a final survey just after the election. But it just took way longer than we had hoped to (a) get volume recruitment going at a price we could afford and (b) finish developing our complex software stack. So we pushed everything back two months.

So recruiting started in July and finished in September, we turned on the experimental arms between September and October, and the midline survey was scheduled for the week before the election. We chose to get answers before the election because we figured the election might change everything and swamp any earlier effects from the experiment.

To get the maximum response rate, we designed a sequence of messages sent every day or two over the week before the election, via email and browser notifications, our two available communication channels. We offered $5 until November 3rd, then upped it to $10 for people who hadn’t yet answered. We had a fully automated pipeline that sent all messages on a fixed schedule (emails via Postmark) and paid people via gift cards, PayPal, or ACH (via Tremendous). Sending cash with code is scary, so we implemented a ton of anti-fraud measures, including making sure that the user had actually used the extension at some point since October 1st. It’s always a little complex to answer the question “how many users do you have?”, but this is how we counted for the purpose of the midline, where we saw about 6,500 users.

And all of this worked. We got over 5,000 responses to the midline survey, which is about a 77% response rate. This is quite good!

We probably would have gotten an even better response rate if not for a bug in our code that sent emails to only a third of our users until we discovered it on the morning of Nov 4th. We built all of this mission-critical code so very carefully (unit tests, careful exception reporting, observability and alerts) and still… software is hard.
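The payment escalation and the activity check used to count midline users can be expressed as two small rules. This sketch assumes that responses on or after November 3, 2024 receive $10 (the text does not say whether November 3 itself paid $5 or $10, so the cutoff is an assumption) and treats a user as active if a hypothetical `last_active` date falls on or after October 1, 2024. The real pipeline’s anti-fraud checks went well beyond this.

```python
from datetime import date

ACTIVITY_CUTOFF = date(2024, 10, 1)  # "used the extension at some point since October 1st"
RAISE_DATE = date(2024, 11, 3)       # assumption: $10 from this date on; exact cutoff not stated


def is_active(last_active: date) -> bool:
    """Counted as a user for the midline only if active since the cutoff."""
    return last_active >= ACTIVITY_CUTOFF


def incentive_usd(responded_on: date) -> int:
    """$5 for early responders, $10 for people who answered after the raise."""
    return 10 if responded_on >= RAISE_DATE else 5


def count_active(last_active_dates: list[date]) -> int:
    return sum(1 for d in last_active_dates if is_active(d))
```

With about 6,500 users counted as active and just over 5,000 responses, `5000 / 6500` comes to about 0.77, the response rate reported above.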

What’s Next

I don’t want to jinx anything by saying we’ve gotten past the hardest part, but we’ve definitely retired the lion’s share of the risk on the project. The software all works, even though it was late and really expensive. We recruited a huge number of participants, even though it was late and really, really expensive.

The number one question we’re getting at this point is: is the experiment working? Are people becoming less polarized and better informed? We don’t know! We haven’t looked at the data at all yet, in part because we’ve only just gotten the November midline data and we’ve been really busy, but also because we have pre-registered the experiment and don’t want to peek at the data. We’ll see what we get when we analyze according to our protocol in January.

Basically, it’s a big difference-in-differences analysis on our dependent variables. These include measurements in three areas (polarization, news knowledge, and well-being) using three different methods (regular surveys, in-feed surveys, and behavioral data). We’ll also have an incredible observational dataset: what nearly 3,000 Americans saw and did in the three months before and three months after the 2024 election. We have every post and every reaction on Facebook, X, and Reddit (at least on desktop). We are working with some of our colleagues to figure out how best to exploit the scientific potential of this data.
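In its simplest two-group, two-period form, a difference-in-differences estimate is the treated group’s average change minus the control group’s average change. This sketch computes that 2x2 estimate from hypothetical per-participant (pre, post) outcome pairs; the pre-registered analysis may well use a regression specification with its own covariates and standard errors, which this does not attempt to reproduce.

```python
from statistics import mean


def did_estimate(
    treated: list[tuple[float, float]], control: list[tuple[float, float]]
) -> float:
    """2x2 difference-in-differences: mean change in treated minus mean change in control.

    Each tuple is one participant's (pre, post) value of the same outcome measure.
    """
    treated_change = mean(post - pre for pre, post in treated)
    control_change = mean(post - pre for pre, post in control)
    return treated_change - control_change
```

If both groups shift by the same amount (for instance, because the election moved everyone), the estimate is zero; only change beyond the control group’s change is attributed to the ranker.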

At this point we expect three papers from this work: one with the main experimental results, one with observational results, and a methods paper which explains what we learned about recruitment and software. (For a related methods paper, see friend-of-the-project Tiziano Piccardi’s report.)

Thanks, and do you want to run your own experiment?

By the time you add up the core team, everyone involved in the winning rankers, everyone involved in the rankers that didn’t win, engineering staff, administrative staff, and all of our advisors and judges, about 50 people have been working on this project. Many more if you count institutional support staff and vendors. We thank each and every one of you. And of course, we thank our funders. This project has cost almost a million dollars, all of it donated without strings attached in support of basic science. If there were any cheaper way to do this, we surely would have done it.

As for the future, the PRC software may prove to be a huge asset. It cost over $400,000 to produce, and it’s hugely capable: it’s the only software we know of that can add, remove, and reorder items using a modular API on multiple platforms, in real time and without platform permission. We’ve even got a proof of concept for how to do this on Android phones. Here’s a primer on what the PRC software can do. If you’d like to run an experiment that changes what people see on social media (or related platforms like search engines), please get in touch! Only by working together can we afford to do the science needed to answer society’s most pressing questions about technology.
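To give a flavor of what an add/remove/reorder interface involves, here is a hypothetical sketch of applying one ranker response to a feed. The `FeedEdit` shape (an `order` list of existing item ids, where omitted ids are dropped, plus an `inserts` map from final position to new item id) is invented for illustration and is not the PRC software’s actual API; the primer describes the real interface.

```python
from dataclasses import dataclass, field


@dataclass
class FeedEdit:
    """Hypothetical ranker response; not the PRC software's real schema."""
    order: list[str]  # existing item ids in their new order; ids left out are removed
    inserts: dict[int, str] = field(default_factory=dict)  # final position -> new item id


def apply_edit(feed_ids: list[str], edit: FeedEdit) -> list[str]:
    present = set(feed_ids)
    # Reorder and remove: keep only ids that were actually in the feed.
    result = [item_id for item_id in edit.order if item_id in present]
    # Add: insert in ascending position order so each position refers to the final list.
    for position in sorted(edit.inserts):
        result.insert(position, edit.inserts[position])
    return result
```

Keeping the three operations in one declarative response means a ranker never touches the page directly; the extension can validate the edit (for example, ignoring ids that were not in the feed) before applying it.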