What Might Work: A Guide to Previous Research

Try short-term outcomes, bridging-based ranking, and adding content

We are now less than one month away from the first-round submission deadline. Have you decided which outcomes you would like to try to move, and how you’re going to try to move them? Here is a very brief guide to some previous research that contains important hints about what is likely to work. You can assume our judges are familiar with this background.

The broadest outcomes are hardest to move

You may want to get familiar with the Facebook 2020 election studies. These tested a variety of interventions, including comparing chronological to algorithmic ranking and increasing the amount of politically cross-cutting content in participants’ feeds.

The latter study is a strong test of the “filter bubble” hypothesis, the idea that polarization results from a lack of exposure to politically diverse opinions. So what happened to political attitudes when people were shown more cross-cutting content for three months? Basically nothing.

Similarly, in 2019 Instagram did a large experiment hiding like counts on posts, which we now know about through leaked documents. The idea was to reduce “negative social comparison” which is thought to contribute to anxiety and depression, especially for young people (our study is 18+ only though). Hiding like counts reduced the number of people reporting negative social comparison by 2%, but did not significantly move general measures of well-being.

The more narrowly targeted the measure is, the easier it is to move. One big challenge with trying to move general political attitudes and well-being is that most of the user’s life doesn’t happen on social media, so the maximum effect of changes to social media is limited.

Then why do we include broad measures at all? First of all, we want to check for unwanted side effects of each algorithm. More importantly, there are cases where these outcomes do change. In one experiment, asking users to subscribe to cross-partisan news sources decreased affective polarization by a few percent. An experiment changing what people watched on TV also moderated political attitudes. Deactivating Facebook entirely for four weeks improved an index of well-being — but it also reduced news knowledge.

These broad outcomes are difficult but not impossible to move. This is why the challenge is, well, a challenge.

There’s not that much civic content

We expect many submissions to primarily target content on political, civic, or health topics. For example, this is where most misinformation is. However, it’s important to remember that for most people, this is only a small fraction of what they see. There are exceptions, like particular subreddits. But news is about 3% of users’ Facebook feeds globally, while one of the Facebook 2020 U.S. studies above mentions that “civic and news content make up a relatively small share of what people see on Facebook (medians of 6.9% and 6.7%, respectively)”.
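As a rough back-of-the-envelope illustration of what these shares imply, the sketch below computes an upper bound on how much of a feed a ranker can touch if it only acts on one category of content. The function name and the "fraction acted on" parameter are our own illustrative assumptions; only the 6.9% figure comes from the study quoted above.

```python
def max_feed_share_affected(category_share: float, fraction_acted_on: float) -> float:
    """Upper bound on the share of feed items a ranker can change.

    category_share: fraction of the feed in the targeted category
        (e.g. 0.069 for the median civic share quoted above).
    fraction_acted_on: fraction of that category the ranker upranks,
        downranks, or deletes (hypothetical parameter, 0.0 to 1.0).
    """
    if not 0.0 <= category_share <= 1.0:
        raise ValueError("category_share must be between 0 and 1")
    if not 0.0 <= fraction_acted_on <= 1.0:
        raise ValueError("fraction_acted_on must be between 0 and 1")
    return category_share * fraction_acted_on


# Even a ranker that acts on every civic post touches only a small
# slice of what the median user sees.
civic_share = 0.069
for fraction in (0.25, 0.5, 1.0):
    share = max_feed_share_affected(civic_share, fraction)
    print(f"acting on {fraction:.0%} of civic posts -> at most {share:.1%} of the feed")
```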

This means that if you only uprank/downrank/delete such content, the maximum “dose” your algorithm can deliver is quite low. Which leads us to…

Adding posts can be really powerful

Short interactions can have large effects on polarization and related attitudes. The Strengthening Democracy Challenge (which was a major inspiration for us) tested 25 “interventions” that people could complete online in eight minutes or less. These were mostly quizzes and videos. That research found that these could change political attitudes, and those changes were still pretty strong two weeks later.

Another very interesting type of algorithm is bridging-based ranking, which attempts to select content that will be popular on both sides of a political divide. Diversity of engagement turns out to be a strong signal of quality, and can reduce exposure to misinformation, extreme views, harassment, etc. This is the type of algorithm that powers Twitter’s Community Notes, and something of this sort is also in use at Facebook.

On the other hand, there just may not be that much content that bridges political divides in participants’ feeds. But you could find that content elsewhere and add it in using the API. Nothing is stopping you from publishing that content yourself. You could even partner with an organization that wants to write and promote such bridging content. Use a scraper to tell your ranker when new content is available.
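To make the bridging idea above concrete, here is a minimal sketch that scores each post by its approval rate in the group that likes it least, so a post only ranks highly if both sides respond well. This is a deliberately simplified heuristic, not the algorithm used by Community Notes or Facebook. The `Post` fields, the two-group split, and the smoothing constants are all assumptions made for illustration; your ranker will need to derive group signals from whatever data it actually receives.

```python
from dataclasses import dataclass


@dataclass
class Post:
    # Hypothetical fields: per-group like and view counts are assumed
    # to be available or estimated by your own pipeline.
    post_id: str
    left_likes: int
    left_views: int
    right_likes: int
    right_views: int


def approval_rate(likes: int, views: int,
                  prior_likes: float = 1.0, prior_views: float = 20.0) -> float:
    """Smoothed like rate, so posts with few views are not over-rewarded."""
    return (likes + prior_likes) / (views + prior_views)


def bridging_score(post: Post) -> float:
    """Score a post by its approval in the least approving group."""
    return min(
        approval_rate(post.left_likes, post.left_views),
        approval_rate(post.right_likes, post.right_views),
    )


def rank_by_bridging(posts: list[Post]) -> list[Post]:
    """Return posts sorted from most to least bridging."""
    return sorted(posts, key=bridging_score, reverse=True)


posts = [
    Post("one_sided", left_likes=90, left_views=100, right_likes=2, right_views=100),
    Post("bridging", left_likes=30, left_views=100, right_likes=25, right_views=100),
    Post("unseen", left_likes=0, left_views=0, right_likes=0, right_views=0),
]
ranked_ids = [p.post_id for p in rank_by_bridging(posts)]
print(ranked_ids)
```

Taking the minimum across groups is one simple design choice. It means a post that is hugely popular with one side gets no credit for that popularity unless the other side also engages positively.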

In-feed surveys are the easiest to move

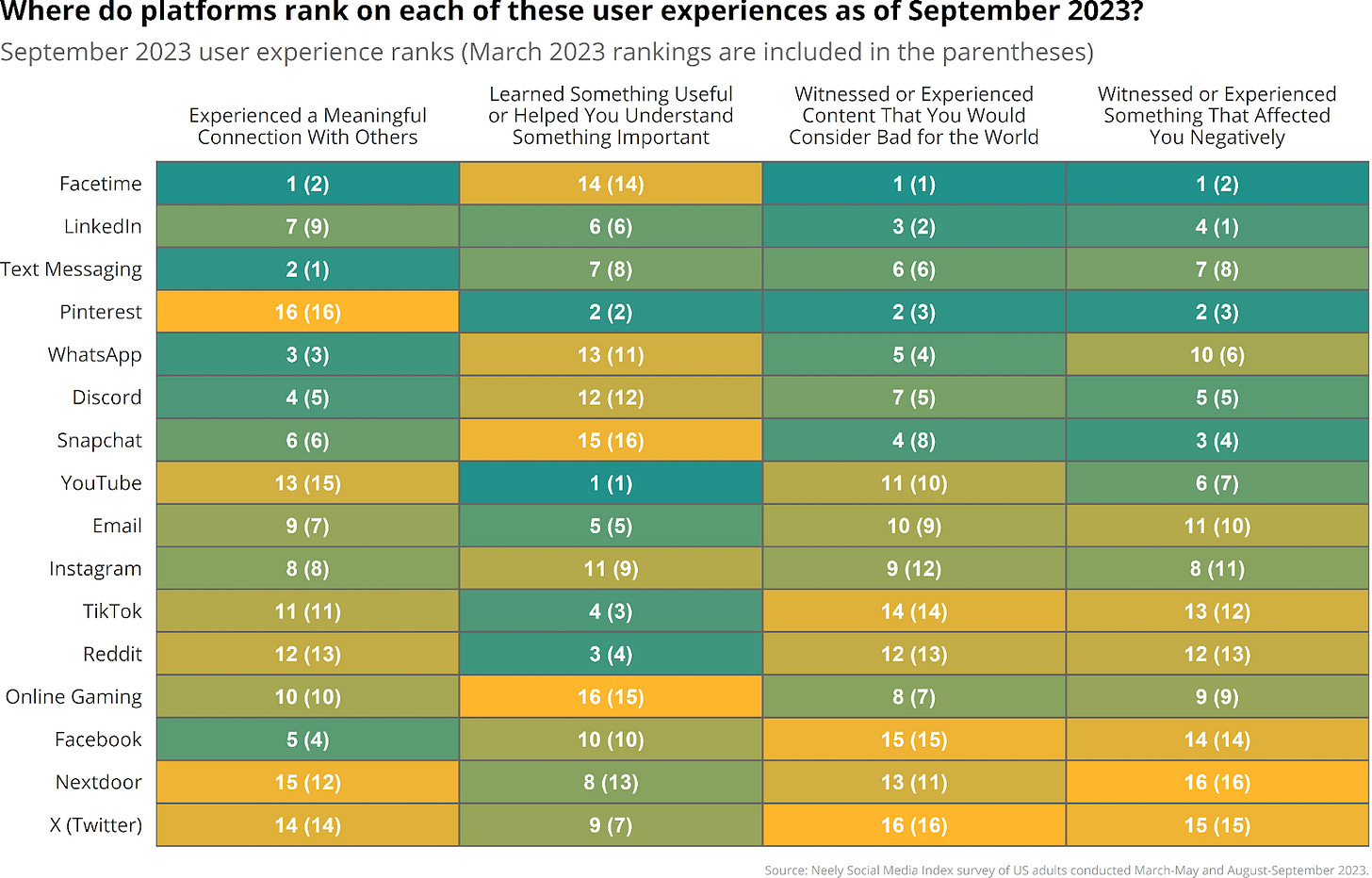

We will be measuring a number of outcomes using in-feed surveys, short questionnaires that appear on the page like a poll with 1-3 questions. While broad outcomes and attitudes are hard to move, experiments at platforms have found that measures of on-platform experiences can be changed much more easily. For example, the Neely Center at USC tracks four different types of experiences across platforms, modeled on questions originally used in internal Facebook surveys.

We’ll be asking these four questions, plus questions about a person’s recent emotional experience, and a few polarization questions. Seeing just a few tweets can change someone’s emotions or make them feel worse about their political outgroup. Political attitudes like support for undemocratic tactics have also been shown to move in the short term.

While we are ultimately looking for algorithms that have long term effects, changes on just one platform (or even our three platforms) may be overwhelmed by all the other media we consume. This may be why most experiments testing the persuasive power of media show small effects. This means that when long term effects do occur, they must be from repeated exposure to messages that cause short term effects. The good news is that these shorter term effects do appear, and are measurable.

Engagement and exposure

We will be measuring exposure — what people actually see — in several ways. For example, we will measure how much political content people see, the ideology of that content, and an information quality index which will measure the fraction of misinformation in people’s feeds.

Exposure is probably the easiest outcome to move, because you have direct control over what people see. You could, for example, remove all content from “low quality” domains which are likely to publish misinformation (there are various lists).
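As a minimal sketch of the domain-based filtering just described, the code below drops posts that link to a blocklisted domain and reports the fraction of posts that did. The post format (a dict with a `urls` list), the placeholder domain names, and the fraction measure are assumptions for illustration only; they are not the challenge's actual post schema or its information quality index.

```python
from urllib.parse import urlparse

# Placeholder blocklist; in practice you would load one of the
# published lists of low-quality domains.
LOW_QUALITY_DOMAINS = {"example-hoax.test", "fake-news.example"}


def domain_of(url: str) -> str:
    """Return the lowercase hostname without a leading 'www.'."""
    host = urlparse(url).hostname or ""
    return host[4:] if host.startswith("www.") else host


def is_low_quality(url: str, blocklist: set[str]) -> bool:
    """True if the URL's host is a blocklisted domain or one of its subdomains."""
    host = domain_of(url)
    return any(host == d or host.endswith("." + d) for d in blocklist)


def links_low_quality(post: dict, blocklist: set[str]) -> bool:
    """True if any URL in the (hypothetical) 'urls' field is blocklisted."""
    return any(is_low_quality(u, blocklist) for u in post.get("urls", []))


def filter_posts(posts: list[dict], blocklist: set[str]) -> list[dict]:
    """Keep only posts that do not link to a blocklisted domain."""
    return [p for p in posts if not links_low_quality(p, blocklist)]


def low_quality_fraction(posts: list[dict], blocklist: set[str]) -> float:
    """Fraction of posts linking to a blocklisted domain (0.0 for an empty feed)."""
    if not posts:
        return 0.0
    return sum(links_low_quality(p, blocklist) for p in posts) / len(posts)


feed = [
    {"id": "a", "urls": ["https://www.example-hoax.test/story"]},
    {"id": "b", "urls": ["https://news.example.org/report"]},
    {"id": "c", "urls": []},
    {"id": "d", "urls": ["http://sub.fake-news.example/item"]},
]
kept_ids = [p["id"] for p in filter_posts(feed, LOW_QUALITY_DOMAINS)]
print(kept_ids, low_quality_fraction(feed, LOW_QUALITY_DOMAINS))
```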

Remember, though, that we will also measure engagement. The easiest way to prevent people from seeing “bad” posts is to make the feed so boring that they stop using the product. You can make people eat their vegetables to a certain extent, but not an unlimited amount, and if your algorithm significantly reduces engagement then it probably won’t be useful in practice — neither users nor companies are going to like it.

Some small loss of engagement is a likely outcome for higher quality feeds though, because in many (not all) situations there is a negative correlation between content quality and engagement. For example, talking negatively about the outgroup (“those people are so terrible!”) really does get people to click.

Your ranker will get the previous engagement on each post (previous likes etc.), and you could use this with standard recommender algorithms to try to predict future engagement, so you could certainly rank posts by predicted engagement. But this is what platforms already do, so consider not doing it. Try ranking by content and other signals instead. Here’s a whole paper on using non-engagement signals in content ranking.

Don’t forget: you can add up to three questions

We don’t guarantee that we will include all the questions that each winning team wants, but convince us. More here. Choose interesting variables and convince us (by citing previous research) that you can move them.